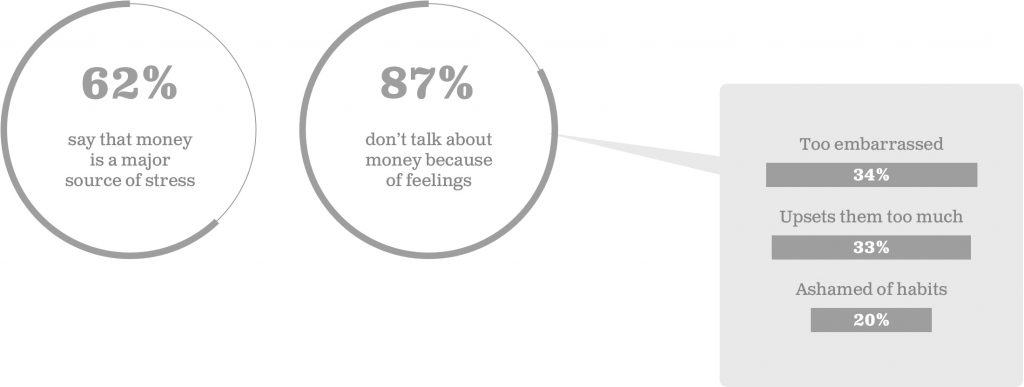

I’ve had the pleasure (and pain) of working in several high-stakes industries like healthcare and finance, and one truth rings out across any high-stakes scenario: people get super emotional. Unsurprisingly, they get especially emotional when their life or livelihood is involved. Yet from a product design perspective, we rarely address those emotional states in our work, even though the market is begging for emotional help. In finance, for example, 62% of the growing market says that money is a stressor, and 87% of them cite their feelings as the reason they don’t talk about it.

At this point you might say to yourself: OK, I could address this gap in any number of channels, but if 87% just said they don’t want to talk about it, why would I consider a channel whose entire interface is conversation? The answer lies in a 2014 study by the University of Southern California. Researchers brought in about 200 people to interact with a therapy bot they’d built and divided them into two groups. One group was told the bot was entirely automated, with no human interaction or oversight; the other was told the bot was semi-automated and that a human would be operating it remotely. The participants who believed they were talking only to the bot were far more likely to open up and reveal their deeper, true feelings.

What we learn from this study and similar research, particularly in the mental health and addiction spaces, is that people see bots as a “safe space” where they can share ideas and feelings without the fear of judgment or bias they perceive in human interactions.

Tips for Creating a Successful Emotional VUI Experience

How do we create a successful voice experience in an industry we know to be highly emotional for our users? Particularly if it’s your first foray into the space, bringing user emotions to the forefront in three key areas will get you most of the way there.

1. Scope. Knowing what makes a good use case for voice (and what doesn’t) is key to playing to the channel’s strengths and getting customers to adopt the experience. It is also key to making sure voice is the right fit for a stressful situation.

For example, say you have a user who runs 20 pet stores across the five boroughs of New York and is constantly in their car visiting their locations. User research may tell you that they are quietly panicking and increasingly stressed about staying up to date with profit-and-loss sheets, but they don’t have the time or ability to review a stack of spreadsheets in depth, particularly while they’re on the road. A voice experience addressing emotions in finance here might be a skill that eases concern and builds confidence by pulling the numbers for a particular store, which the user can listen to in the car on the way to that location. On the health side, Woebot, a cognitive behavioral therapy bot for people who need some extra emotional support, is a good example of a bot that has thoroughly scoped where it will and won’t help.

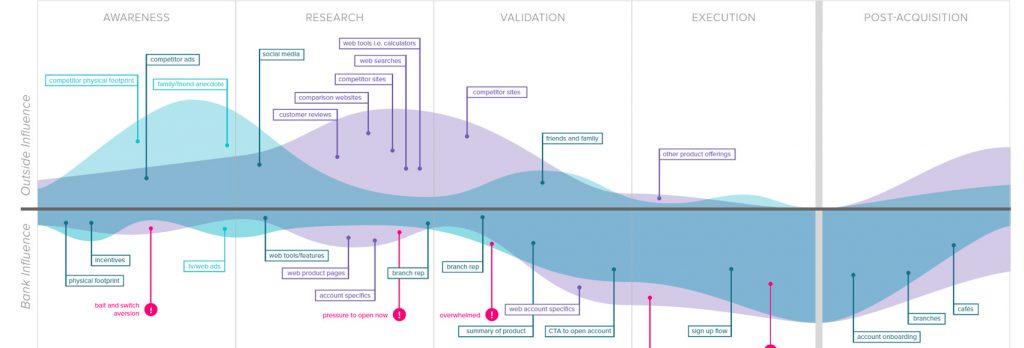

2. Context. You can’t design for emotions without knowing your users’ emotional context, and the best way to learn it is through in-person user research (methods like contextual inquiry, ethnography, etc.). As you gather your data, plot it out as a journey; even better if you can co-build it with your users. Include the order of operations, how people make decisions or take actions, and the feelings they have at each moment.

Analyze what’s happening around the moments that trigger those emotions, and ask people where they wish they’d had a guide or friend to talk them through. This helps you identify both the areas where things are going well, which you may be able to replicate, and the areas where more support is needed. The Wolters Kluwer/Emmi Diabetes Support system is an example of a multimodal voice experience that handles user emotional context really well. (Bias alert! I was on the team that created that system.)

3. Interactions: Tone Consistency. In voice experiences, the interactions are the words and behaviors of both the system and the user. When you script and design these interactions, bring in your marketing team, UX writers, content strategists, and anyone else in the organization who communicates with your users. It’s imperative that you use a unified voice, in both tone and wording, across print and audio. Multiple speakers or personas confuse users about who your brand is and what values you represent, even when those users are in a good state. The more emotional people become, the less patience they have for confusion and the more abandonment you’ll see.

3a. Interactions: Applying Context to Words. It’s also important to apply the emotional context you uncovered in user research to your interactions, which, in voice, mostly comes down to the words you use. For example, if you learn that people file a life insurance claim only when someone close to them has passed away, incorporate that emotional context into any automated call center you design for that space. It seems obvious, but you’d be surprised how often that kind of sympathy gets left out. Addressing your users with a bit of compassion and acknowledging their emotional context goes a long way, both in calming their negative emotions and in distinguishing your brand as one that truly understands and cares.

Addressing All Emotional States

There are plenty of emotional scenarios in everyday life. You don’t have to address every single one that might come up, and honestly, you shouldn’t. Some scenarios are simply better handled by humans than by bots (for example, you don’t want a bot giving financial guidance and advice; the emotional landmines alone are too nuanced for a bot to handle). And that’s OK. When a scenario is too nuanced or complex for our current technology or design skills, leave it to a person. The point of automating with voice and chat is to assist with the easier transactions, or with situations where human interaction could get in the way of the experience or of getting the information needed, not to automate every single conversation.

For the use cases you do tackle, researching the general emotional context of your users’ journeys, planning for those emotions in the language and behaviors of your interactions, and acknowledging that emotional context together create an experience that people trust and want to return to.